Well, as it turns out, our friend ChatGPT isn't as perfect as we'd like to believe. The brainchild of OpenAI, this virtual chatbot has taken the world by storm, revolutionising communication and automating customer support. But let's pull back the curtain a bit and expose the cracks.

So, are you ready for a fun, cheeky insight into the not-so-slick side of AI? Let's dive in, shall we?

1. Contextual Slip-Ups

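Firstly, and most significantly, ChatGPT can struggle to keep a conversation consistent and coherent over a long exchange. It can lose track of the context or go off on tangents, leaving users befuddled and mildly entertained (depending on the seriousness of the conversation, of course). This limited short-term memory is a real drawback, especially in tasks that involve a lengthy dialogue or recalling details given earlier. Part of the reason is that the model can only take a limited amount of text into account at once (its "context window"), so details from early in a long chat can fall out of view.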

For example, it might refer to your Aunt Sheila in one sentence and forget her in the next. Say you start a chat by asking ChatGPT, "I have an Aunt Sheila who's allergic to peanuts. What should I prepare for dinner?" A few exchanges later, the AI might suggest a peanut satay, completely disregarding the allergy you mentioned. It's as if Aunt Sheila's peanut allergy has evaporated into thin air!

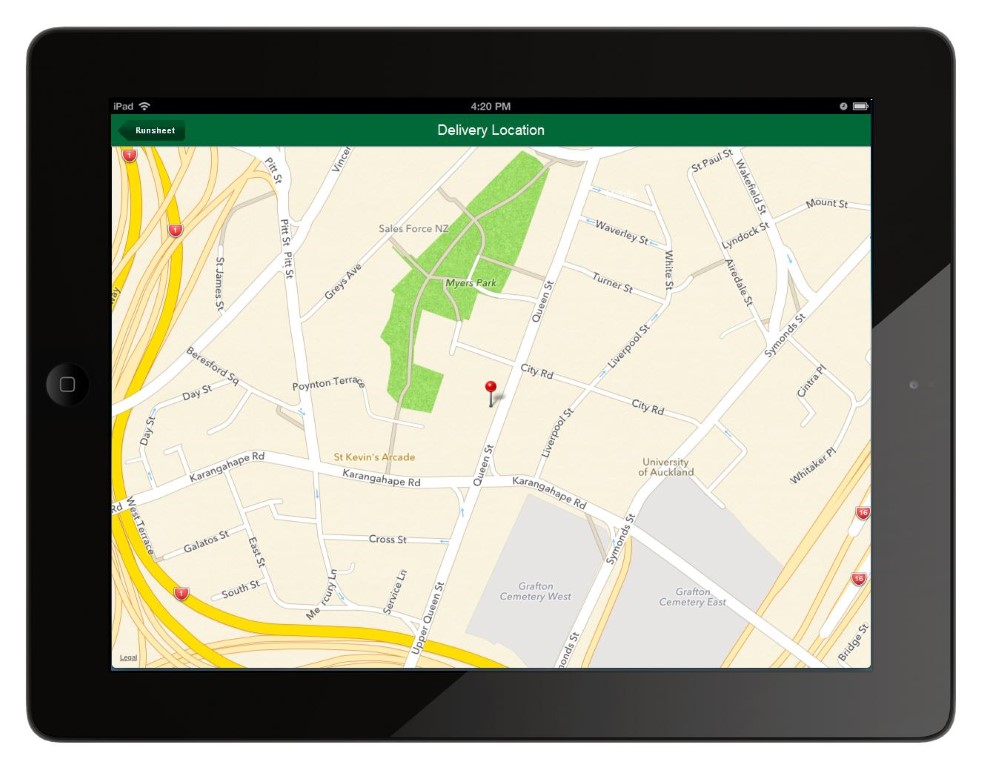

ChatGPT recognises natural language and provides informed answers, but with limitations.

2. Confusion Over Controversial Topics

Secondly, controversial topics can throw ChatGPT off. It's like watching a game of hot potato: no one wants to hold it for too long! The model tends to play it safe by refusing to take a stand, or it produces answers so carefully neutral that they come across as vague or even meaningless.

Suppose you ask ChatGPT for its opinion on a hot-button issue like Brexit. Instead of a detailed analysis of the pros and cons, you might get a response along the lines of "Brexit is a complex topic with many different opinions," leaving you none the wiser.

3. Unintentional Spread of Misinformation

Thirdly, ChatGPT isn't reliable at fact-checking. Its responses are generated from patterns it learned during training, not from live, up-to-date data, so it can repeat outdated or false information while presenting it as accurate.

Let's say you ask who the current Prime Minister is. It could name whoever held the post when its training data was collected, making for an awkward political blunder.

It is also worth remembering that ChatGPT can get even seemingly simple facts wrong, because it is a statistical model that relies on the data it was trained on. For example, when asked for the average monthly rainfall in Bangkok, it got the numbers wrong on several attempts.

4. Inappropriate Responses

Fourthly, though OpenAI has implemented safety measures to reduce harmful or inappropriate outputs, there are instances where ChatGPT can generate content that's not quite kosher.

For instance, if a user asks for 'exciting stories', the AI might generate a thrilling tale that includes elements of violence, which could be seen as unsuitable for young audiences.

5. Creativity Gone Wrong

Lastly, ChatGPT can get a little too creative for its own good. Because it generates responses based on patterns and probabilities, it can sometimes produce nonsensical or wildly fanciful statements, often delivered with complete confidence. If you've ever found yourself in a conversation about alien invasions or flying elephants, you know what I'm talking about.

Now, these are the glitches we've spotted. But it's worth asking: how many more are we missing? That's the burning question that keeps AI researchers and developers up at night. After all, like every technology, AI has its limitations.

So what's the takeaway here, friends? AI, as represented by ChatGPT, is a powerful tool, one that's transforming the way we communicate, work, and even think. But it's far from perfect, and understanding its limitations and potential pitfalls is critical to using it well.